October 2015 Issue


Translation Project Quality Management: A Technological Perspective

Written by Jiajun Qian

Abstract

This study examines the quality management of translation projects from a technological perspective. It introduces computer-aided translation (CAT) tools that bear on the quality of translation projects during the three quality management processes: Plan Quality Management, Perform Quality Assurance and Control Quality. It proposes that technology-based quality management throughout the whole translation process can, to some extent, ensure the quality of translation projects.

Keywords: Translation project management; Computer-aided translation tools; Quality assurance

1. Introduction

In the era of globalization, the traditional mode of translation, in which translators complete translation tasks single-handedly, has been replaced by project-based translation activities, which are flourishing both in China and abroad (Wang, Leng, & Cui, 2013). According to the PMBOK® Guide, the project management processes can be grouped into ten Knowledge Areas: Project Integration Management, Project Scope Management, Project Time Management, Project Cost Management, Project Quality Management, Project Human Resources Management, Project Communications Management, Project Risk Management, Project Procurement Management and Project Stakeholder Management (PMI, 2013, p. 59). Translation project quality management has been high on the agenda of today's language service providers (LSPs).

2. Translation Quality

In terms of translation quality, studies have mostly approached the subject from a theoretical perspective. House (1977, 1997, 2004, 2007) proposes a model based on pragmatic theories of language use: a translation should have a function equivalent to that of the original and should employ equivalent pragmatic means to achieve that function. Hönig (1998) holds that translation quality assessment (TQA) can be either therapeutic (focusing on the reasons for errors) or diagnostic (focusing on the readers' expected response). Williams (2004) proposes an updated, argumentation-centered model for assessing translation quality. These studies tend to approach TQA from a linguistic perspective and overlook the nature of today's translation activities, which are project-based and involve many complex processes; hence the need for computer-aided translation (CAT) tools. Research on translation quality from a technological perspective is scarcer. Only a few scholars, such as Xu and Guo (2012) and Wang and Zhang (2015), have worked in this field. They briefly introduce CAT tools such as SDL Trados, Déjà Vu and ApSIC Xbench with limited examples, and they emphasize only the quality assurance carried out after translators have completed their tasks. Yet a high-quality translation project is the result of good quality control and management throughout the whole project (Wang & Wang, 2013, pp. 94-95). Many factors determine the quality of translation projects (Fig.1), such as the PM, senior translators, communication between the PM and team members and between the team and the client, CAT tools, management procedures, and supporting roles such as the project requester, proofreader, terminologist and technician (Lv & Yan, 2014, pp. 48-52).
The present study explores quality management throughout the whole translation project from a technological perspective, using specific examples. It offers further insights into how computer-aided translation tools can support the quality management of translation projects.

Fig.1. Factors Determining the Quality of Translation Projects

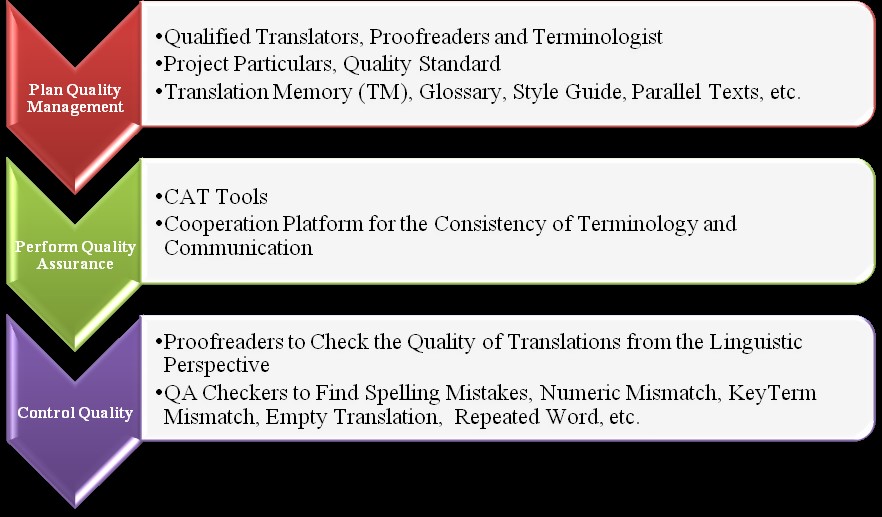

3. Translation Quality Management

Project Quality Management includes “the processes and activities of the performing organization that determine quality policies, objectives, and responsibilities so that the project will satisfy the needs for which it was undertaken” (PMI, 2013, p. 227). It comprises three processes (Fig.2): Plan Quality Management, Perform Quality Assurance and Control Quality (PMI, 2013, p. 61). Similarly, the present study argues that in translation project quality management, Plan Quality Management should be carried out by the PM and the terminologist, Perform Quality Assurance by the PM and the translators, and Control Quality by the PM and the proofreader.

Fig.2. Translation Project Quality Management Overview

3.1. Plan Quality Management

Plan Quality Management refers to “the process of identifying requirements and/or standards for the project and its deliverables and documenting how the project will demonstrate compliance with quality requirements” (PMI, 2013, p. 227). In the Plan Quality Management of translation projects, the PM, apart from selecting qualified translators and proofreaders and providing materials such as project particulars, a style guide, parallel texts and a quality standard like the LISA QA Model, is expected to provide translators with a relevant translation memory and glossary.

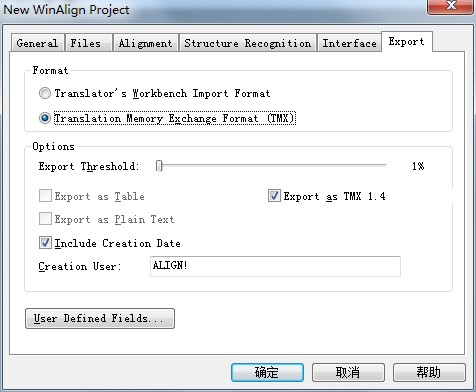

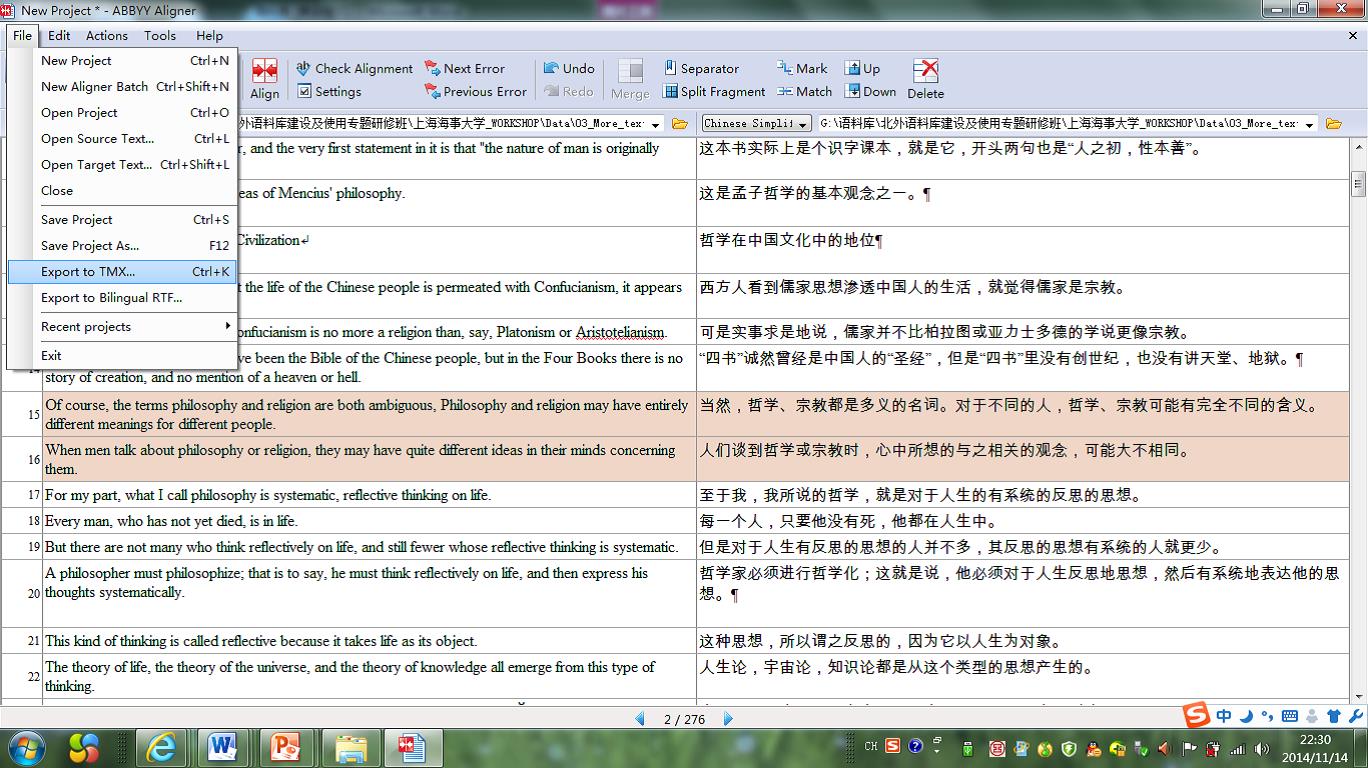

3.1.1. Tools for Alignment

If no translation memory is available, translators may use SDL Trados WinAlign (Fig.3) or ABBYY Aligner (Fig.4) to align previously translated documents with their source texts and export the result to TMX.

Fig.3. Interface of SDL Trados WinAlign

Fig.4. Interface of ABBYY Aligner
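To make the alignment output concrete, the following Python sketch shows roughly what an exported TMX file contains: one translation unit (`tu`) per aligned sentence pair, with one language variant (`tuv`) per language. It assumes a minimal TMX 1.4 layout built with the standard library's `xml.etree.ElementTree`; the function `build_tmx`, the language codes and the `creationtool` value are hypothetical and do not reproduce the exact files written by WinAlign or ABBYY Aligner.

```python
import xml.etree.ElementTree as ET

# ElementTree serialises this qualified name as the reserved xml:lang attribute.
XML_LANG = "{http://www.w3.org/XML/1998/namespace}lang"


def build_tmx(pairs, src_lang="en-US", tgt_lang="zh-CN"):
    """Build a minimal TMX 1.4 document from aligned (source, target) sentence pairs."""
    tmx = ET.Element("tmx", version="1.4")
    # Header attributes required by TMX 1.4; the tool name and version are placeholders.
    ET.SubElement(tmx, "header", {
        "creationtool": "align-sketch",
        "creationtoolversion": "0.1",
        "segtype": "sentence",
        "o-tmf": "none",
        "adminlang": "en-US",
        "srclang": src_lang,
        "datatype": "plaintext",
    })
    body = ET.SubElement(tmx, "body")
    for source, target in pairs:
        tu = ET.SubElement(body, "tu")  # one translation unit per aligned pair
        for lang, text in ((src_lang, source), (tgt_lang, target)):
            tuv = ET.SubElement(tu, "tuv", {XML_LANG: lang})
            ET.SubElement(tuv, "seg").text = text
    return ET.tostring(tmx, encoding="unicode")


if __name__ == "__main__":
    print(build_tmx([("To be, or not to be.", "生存还是毁灭。")]))
```

Because the result is plain XML, a PM can inspect it in any text editor to spot misaligned pairs before importing the memory into the CAT tool.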

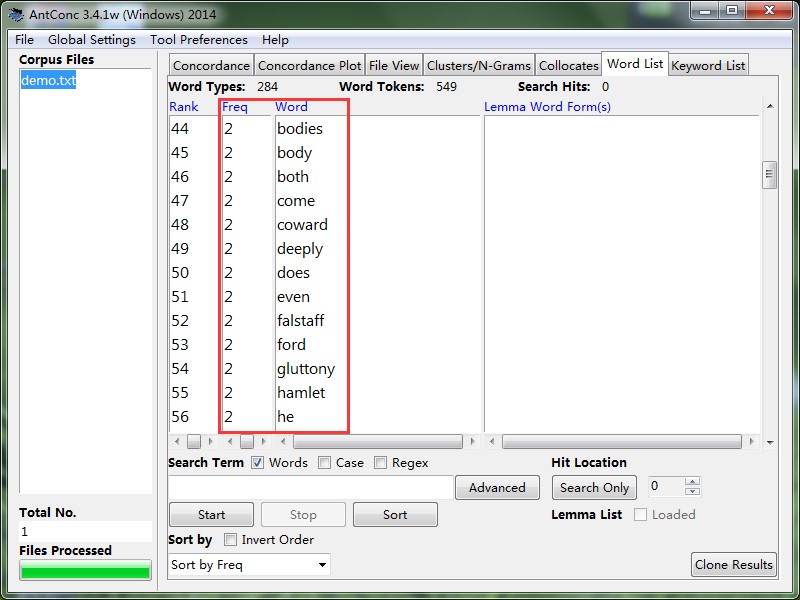

3.1.2. Tools for Frequency Counts

Besides providing a glossary to the translators, the PM may use AntConc to count the frequency of words in the text to be translated (Fig.5). Frequently occurring words deserve close attention, as they may be key terms of the text. After identifying them, the PM may collect these words, which the terminologist will later add, together with their translations, to the MultiTerm termbase.

Fig.5. Interface of AntConc 3.4.1
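The idea behind a frequency list can be sketched in a few lines of Python. The sketch below is not AntConc's implementation; it assumes an English source text, a simple regular-expression tokenizer and a small hypothetical stop-word list, and returns frequent content words as candidate terms for the terminologist to review.

```python
import re
from collections import Counter

# Illustrative stop-word list (an assumption); function words are rarely terms.
STOP_WORDS = {"the", "a", "an", "of", "and", "or", "to", "in", "on",
              "is", "are", "that", "it", "for", "with"}


def candidate_terms(text, top_n=20, min_count=2):
    """Return the most frequent non-stop-words as (word, count) pairs."""
    # Lower-case and split into word tokens, keeping internal hyphens and apostrophes.
    words = re.findall(r"[a-z]+(?:['-][a-z]+)*", text.lower())
    counts = Counter(w for w in words if w not in STOP_WORDS)
    # Keep only words that occur at least min_count times.
    return [(w, c) for w, c in counts.most_common(top_n) if c >= min_count]
```

For a Chinese source text, the text would first need word segmentation, since Chinese is written without spaces between words.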

3.2. Perform Quality Assurance

Perform Quality Assurance refers to “the process of auditing the quality requirements and the results from quality control measurements to ensure that appropriate quality standards and operational definitions are used” (PMI, 2013, p. 227).

3.2.1. Tools for Updating Terminology and Communication

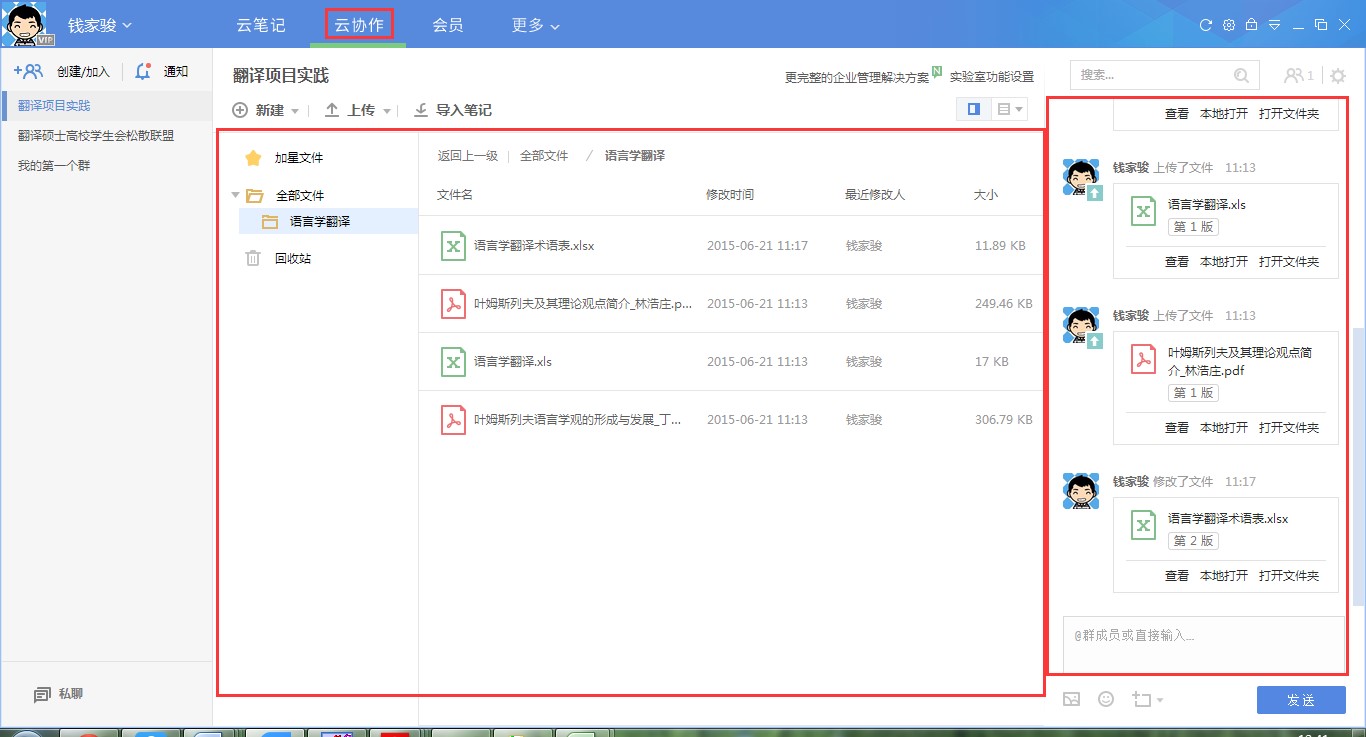

In the Perform Quality Assurance of translation projects, the PM should exchange ideas about the client's needs and quality requirements with the translators in order to achieve real-time quality control during translation (Lv & Yan, 2014, p. 49). Youdao Cloud Cooperation is a good platform for communication between the PM and translators. Liu (2014) uses EditGrid and a QQ group for terminology management: translators who disagree with the terms provided by the terminologist may offer their own versions and suggestions via EditGrid, and then discuss them with the PM and terminologist in the QQ group. The present study holds that this way of communicating is time- and energy-consuming, as team members have to alternate between two platforms. Youdao Cloud Cooperation (Fig.6), by contrast, combines the functions of EditGrid and a QQ group. In the middle of its interface, translators may enter their own versions of terms directly in the glossary, and the PM may upload materials related to the project for the translators' reference. In the right-hand column, team members may discuss every aspect of the project.

Fig.6. Interface of Youdao Cloud Cooperation
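The propose-and-approve workflow described above can be modelled as a small data structure. The Python sketch below is hypothetical (the class `SharedGlossary` and its methods are illustrative names, not an API of Youdao Cloud Cooperation or EditGrid); it keeps each approved term together with the translators' pending suggestions so that the PM and terminologist can review open discussions in one place.

```python
from dataclasses import dataclass, field


@dataclass
class TermEntry:
    source: str
    approved: str  # the currently approved target term
    # Pending suggestions as (translator, suggested_term, comment) tuples.
    proposals: list = field(default_factory=list)


class SharedGlossary:
    """Hypothetical shared glossary: translators propose, the terminologist/PM decides."""

    def __init__(self):
        self.entries = {}

    def add(self, source, target):
        self.entries[source] = TermEntry(source, target)

    def propose(self, source, translator, suggestion, comment=""):
        self.entries[source].proposals.append((translator, suggestion, comment))

    def approve(self, source, decision):
        # Record the final decision and close the discussion for this term.
        entry = self.entries[source]
        entry.approved = decision
        entry.proposals.clear()

    def pending(self):
        """Terms with open suggestions, for the PM and terminologist to review."""
        return {s: list(e.proposals) for s, e in self.entries.items() if e.proposals}
```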

3.2.2. Techniques for Improving Coherence of Translations

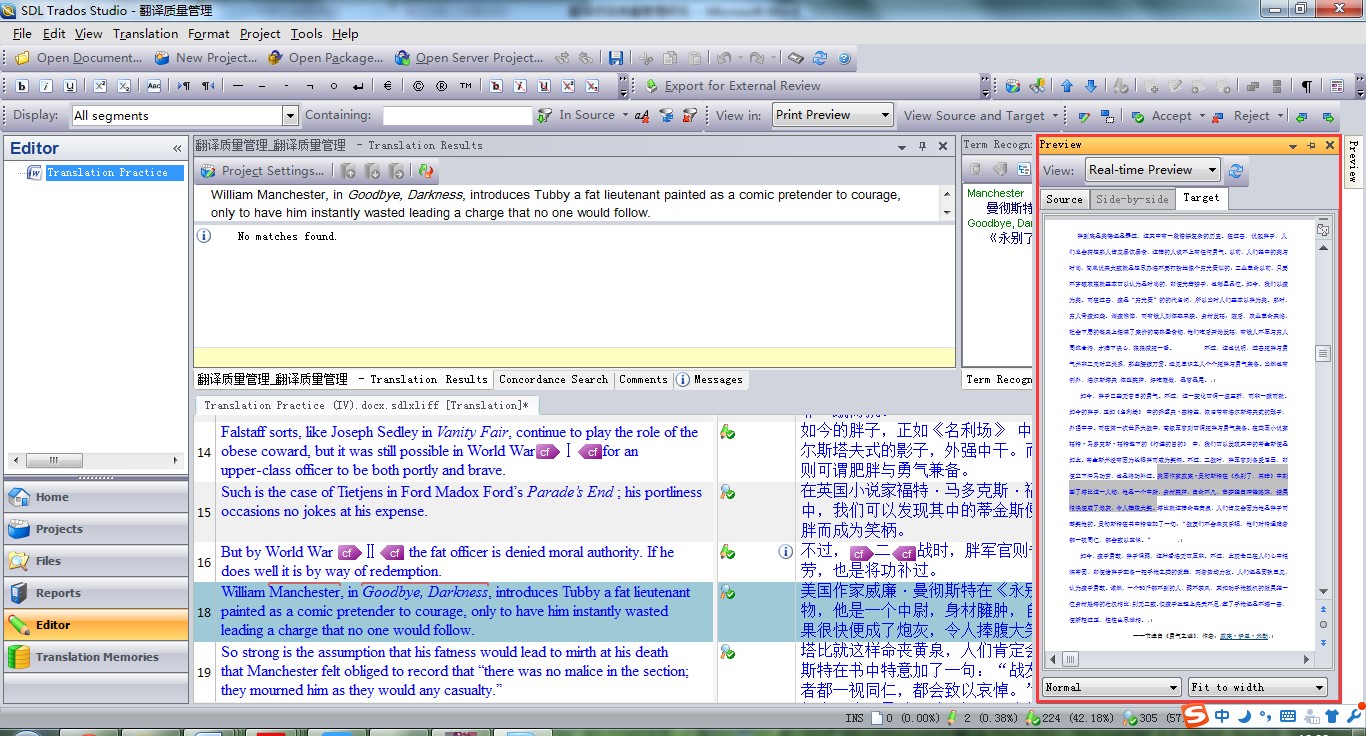

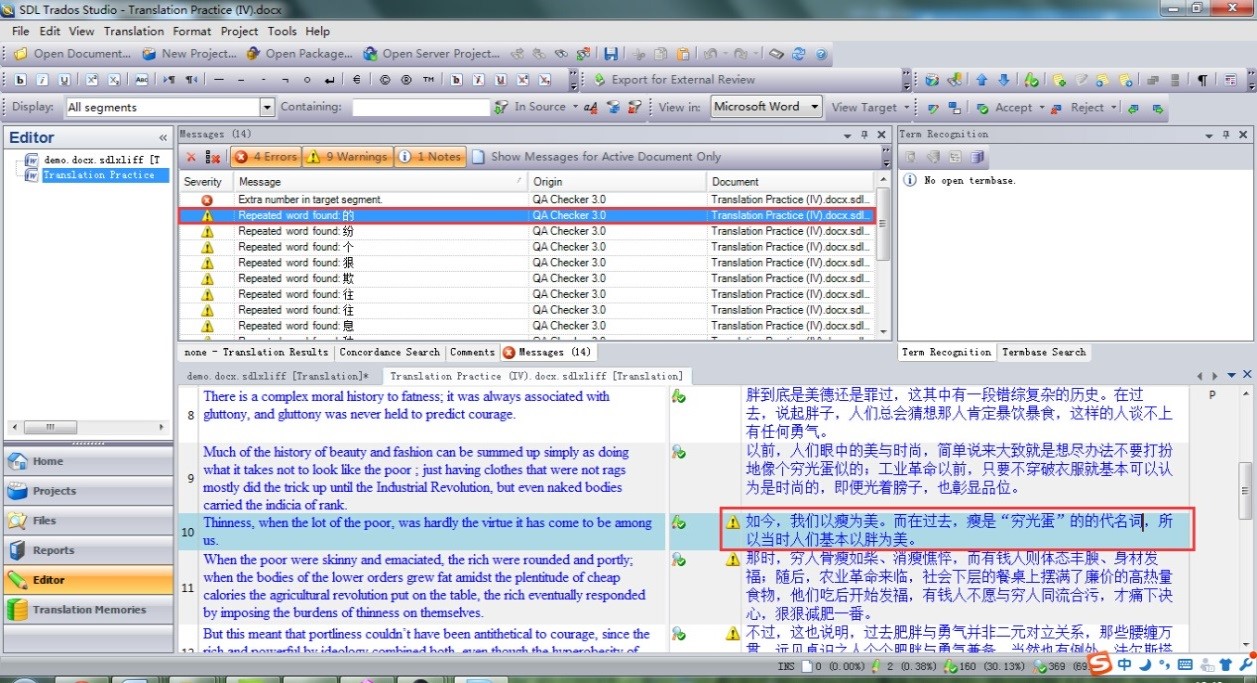

From the translators' perspective, translation quality should not be compromised by the interface of CAT tools. Wang and Sun (2009), for instance, point out a flaw in the interface of CAT tools such as SDL Trados Studio: the default translation unit is the sentence, which prevents translators from seeing the text as a whole and can result in incoherent translations. Translators may, however, merge segments (Xu & Guo, 2015) or use a real-time preview of the source or target text (Fig.7) to make the translation more coherent (Wang, 2013). Otherwise, once the translation is done, the proofreader would have to spend much more time making it coherent and clear, which lowers the efficiency of the project.

Fig.7. Real-time Preview in SDL Trados Studio

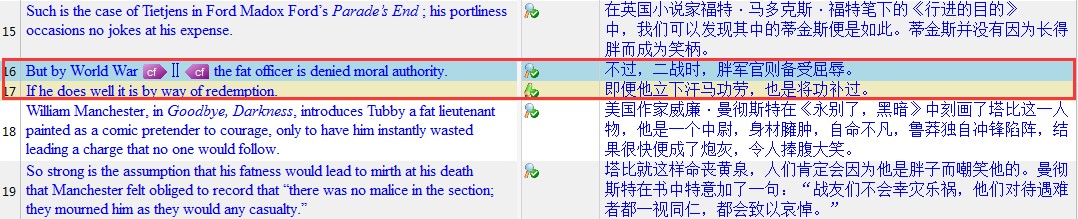

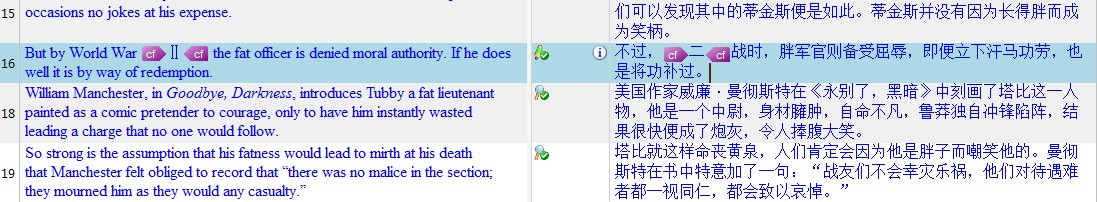

In Segments 16 and 17 below, the translator, probably influenced by the Editor interface of SDL Trados Studio 2011, produced an incoherent translation, which would have been better had the two segments been merged. The original (Fig.8) and improved (Fig.9) versions are as follows:

Fig.8. Translation Before Segments are Merged

Fig.9. Translation After Segments are Merged
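Merging can be pictured as replacing two adjacent translation units with one. The Python sketch below assumes a hypothetical `Segment` record and a hypothetical function `merge_with_next`; it is not how SDL Trados Studio stores segments internally, only an illustration of why a merged unit gives the translator a larger context.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    seg_id: int
    source: str
    target: str = ""


def merge_with_next(segments, seg_id, joiner=" "):
    """Return a new list in which segment `seg_id` is merged with the segment after it."""
    for i, seg in enumerate(segments):
        if seg.seg_id != seg_id:
            continue
        if i + 1 >= len(segments):
            raise ValueError(f"segment {seg_id} has no following segment")
        nxt = segments[i + 1]
        # Join the source sentences; the target is left empty so the translator
        # retranslates the merged unit as a whole instead of stitching two halves.
        merged = Segment(seg.seg_id, seg.source + joiner + nxt.source)
        return segments[:i] + [merged] + segments[i + 2:]
    raise KeyError(seg_id)
```

Clearing the target is a deliberate choice in this sketch: it nudges the translator to render the two sentences as one coherent passage rather than concatenating the two earlier translations.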

3.3. Control Quality

Control Quality refers to “the process of monitoring and recording results of executing the quality activities to assess performance and recommend necessary changes” (PMI, 2013, p. 227). In translation project quality management, the proofreader not only assesses the translation from a linguistic perspective but also uses QA Checker to identify formatting and other mistakes that are hard for humans to spot.

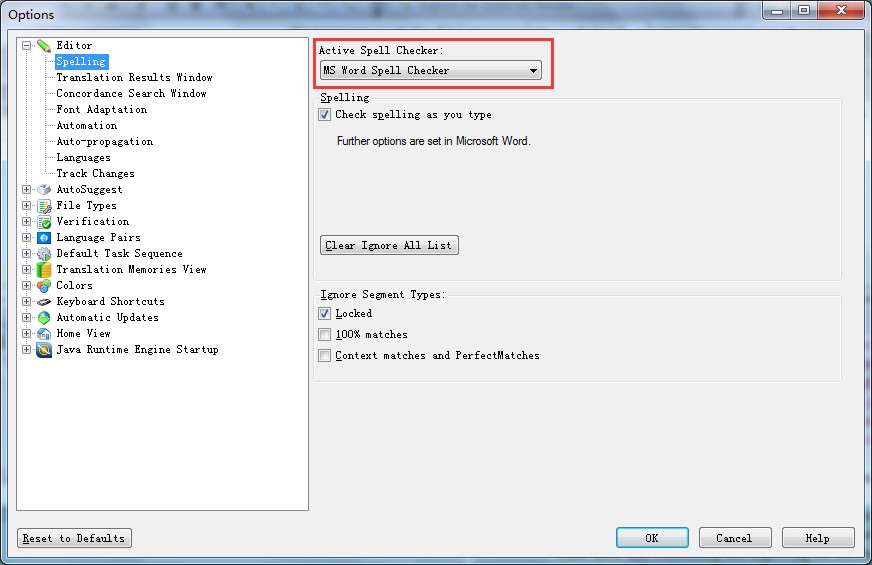

3.3.1. Spelling Mistake

If the translators did not activate the Spell Checker in SDL Trados Studio 2011 during translation, the proofreader may switch from the default Hunspell Spell Checker to the MS Word Spell Checker via “Tools—Options—Editor—Spelling” to check for spelling mistakes (Fig.10). Once the MS Word Spell Checker is activated, misspelled words are underlined in red (Fig.11).

Fig.10. MS Word Spell Checker

Fig.11. Misspelled Word Underlined in Red

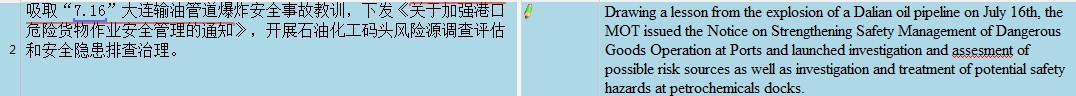

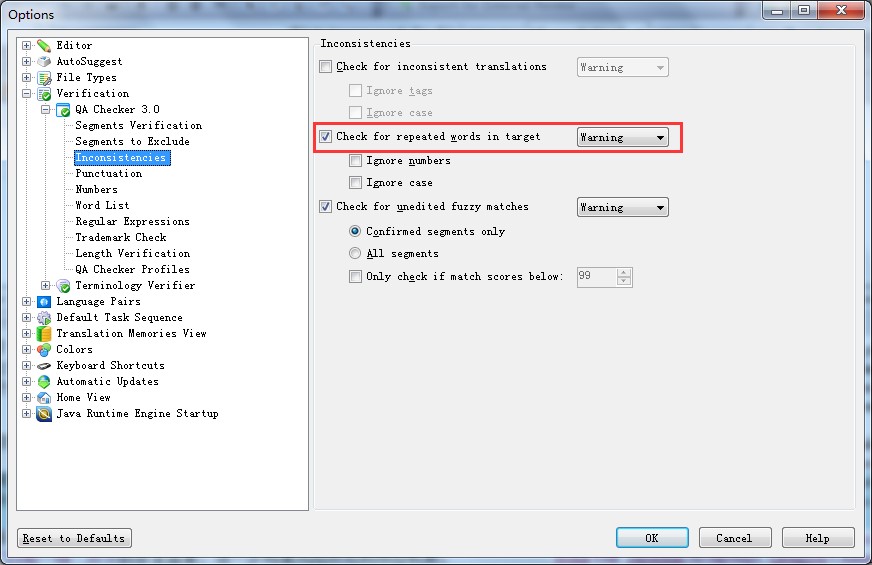

Some spelling mistakes, however, cannot be identified by the MS Word Spell Checker. Repeated characters such as “的的” occur frequently in translations and are hard for the proofreader alone to find in a limited amount of time. The QA Checker in SDL Trados Studio 2011 can do the job: the proofreader may tick “Check for repeated words in target” via “Tools—Options—Verification—Inconsistencies” (Fig.12). In the example below (Fig.13), the segment containing the repeated characters “的的” is issued a Warning by QA Checker. The drawback is that segments such as Segment 11, which contains the legitimate reduplication “狠狠”, are also given a Warning, which is unnecessary.

Fig.12. Check for Repeated Words in Target

Fig.13. Verification Message for Repeated Words
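A check for repeated characters, together with a remedy for the false Warning on “狠狠”, can be sketched with a regular expression and an allow-list of legitimate reduplicated words. The Python sketch below assumes segments are given as a dictionary mapping segment numbers to target text; the allow-list is a hypothetical, deliberately short example, and the sketch does not reflect how the QA Checker in SDL Trados Studio 2011 works.

```python
import re

# Hypothetical allow-list of legitimate reduplicated words; a real project would
# maintain a much longer list.
ALLOWED_REDUPLICATIONS = {"狠狠", "常常", "渐渐", "慢慢", "人人", "天天"}

# A CJK Unified Ideograph immediately followed by the same character.
DOUBLED_CHAR = re.compile(r"([\u4e00-\u9fff])\1")


def repeated_char_warnings(segments):
    """Return (segment_no, pair) for doubled characters not on the allow-list."""
    warnings = []
    for no, text in segments.items():
        for match in DOUBLED_CHAR.finditer(text):
            pair = match.group(0)
            if pair not in ALLOWED_REDUPLICATIONS:
                warnings.append((no, pair))
    return warnings
```

The trade-off lies in the allow-list: any legitimate reduplication missing from it (for example “谢谢”) will still be flagged, so the proofreader remains the final judge.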

3.3.2. Numeric Mismatch

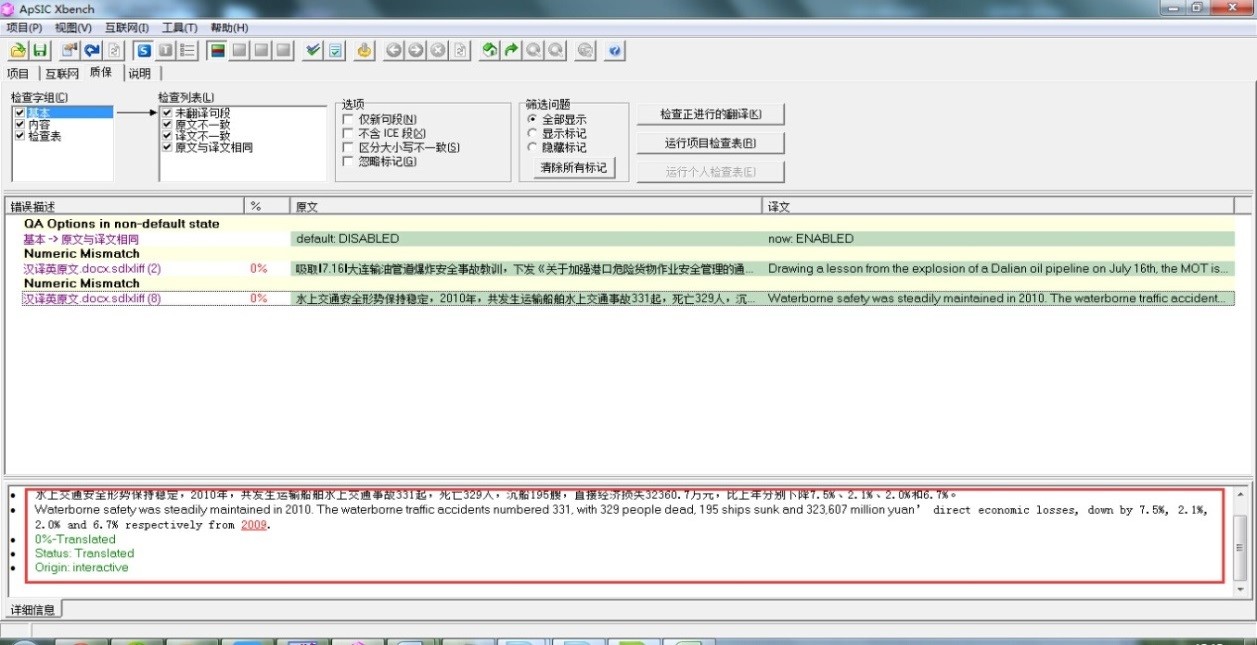

A numeric mismatch occurs when a figure in the source text does not correspond to the figure in the target text. A QA checker can identify numeric mismatches within seconds, a task that would take a human a great deal of time. The present study compares how the QA checkers in SDL Trados Studio 2011 and ApSIC Xbench find numeric mismatches. Interestingly, the latter outperforms the former in this respect, though both have limitations.

In the interface of SDL Trados Studio 2011, the proofreader may verify the numeric mismatch via “Tools—Verify”, while in ApSIC Xbench, the proofreader may check the mistakes via “Project—Properties—Add XLIFF File—Tick Ongoing Translation—Check Ongoing Translation”.

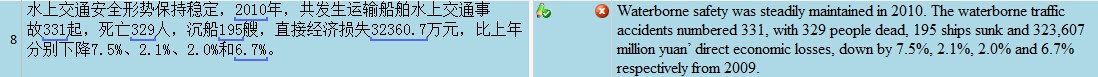

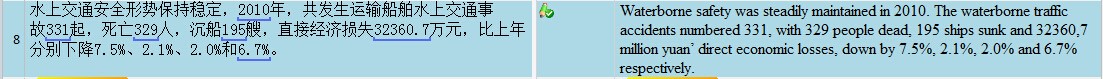

In the example below, the figure “323,607” in the target text of Segment 8 is flagged as an Error by the QA Checker in SDL Trados Studio 2011 (Fig.14), but not by ApSIC Xbench. This indicates that the QA Checker in SDL Trados Studio 2011 reports no Error only when a figure in the target text is exactly the same as the one in the source text. In other words, unlike ApSIC Xbench, the SDL Trados QA Checker compares only the figures in the source and target texts, without taking into account the magnitude words, such as “million”, that follow them.

Moreover, the QA checkers in both SDL Trados Studio 2011 and ApSIC Xbench (Fig.16) flag the figure “2009” as redundant, although it is not: both treat a figure that the translator has added according to the context as superfluous. Conversely, in SDL Trados Studio 2011, if “323,607” is changed to the incorrect “32360,7” and “2009” is removed, the segment is deemed “Correct” (Fig.15). The proofreader should therefore treat the judgements of the QA checker with caution.

Fig.14. Unjustified Numeric Mismatch in SDL Trados Studio 2011

Fig.15. Segment Judged Correct When the Figure Is Changed to a Wrong Value

Fig.16. Unjustified Numeric Mismatch in ApSIC Xbench
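The behaviour described above suggests a refinement: converting numbers to absolute values before comparing them, so that magnitude words such as “million” or “万” are taken into account. The Python sketch below illustrates this under stated assumptions: the multiplier table and regular expression are hypothetical, and the test sentences are invented examples rather than the actual text of Segment 8.

```python
import re
from collections import Counter
from decimal import Decimal

# Hypothetical magnitude table; extend it for the language pair at hand.
MULTIPLIERS = {
    "thousand": Decimal(10) ** 3,
    "million": Decimal(10) ** 6,
    "billion": Decimal(10) ** 9,
    "万": Decimal(10) ** 4,
    "亿": Decimal(10) ** 8,
}

# Digits with optional thousands separators and decimals, plus an optional magnitude word.
NUMBER = re.compile(
    r"(\d[\d,]*(?:\.\d+)?)\s*(thousand|million|billion|万|亿)?", re.IGNORECASE
)


def extract_values(text):
    """Count the absolute values of all numbers in `text`, applying magnitude words."""
    values = Counter()
    for digits, unit in NUMBER.findall(text):
        value = Decimal(digits.replace(",", ""))
        if unit:
            value *= MULTIPLIERS[unit.lower()]
        values[value] += 1
    return values


def numeric_mismatch(source, target):
    """Report values that appear in one text but not in the other."""
    src, tgt = extract_values(source), extract_values(target)
    return {
        "missing_in_target": sorted(src - tgt),
        "extra_in_target": sorted(tgt - src),
    }
```

Like both tools discussed above, this sketch would still flag a figure such as “2009” that the translator adds for context, because it has no way of knowing that the addition is justified.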

3.3.3. KeyTerm Mismatch

Because project-based translation is not done single-handedly, terminology is likely to be inconsistent across the translations of different translators, even when a glossary is provided. QA checkers such as QA Checker 3.0 in SDL Trados Studio 2011 and the one in ApSIC Xbench can identify KeyTerm mismatches for the proofreader automatically.

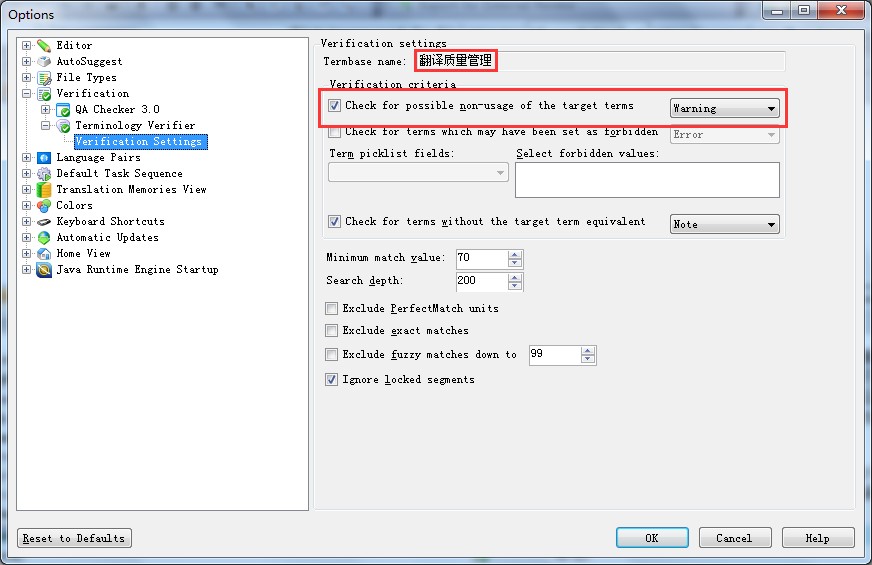

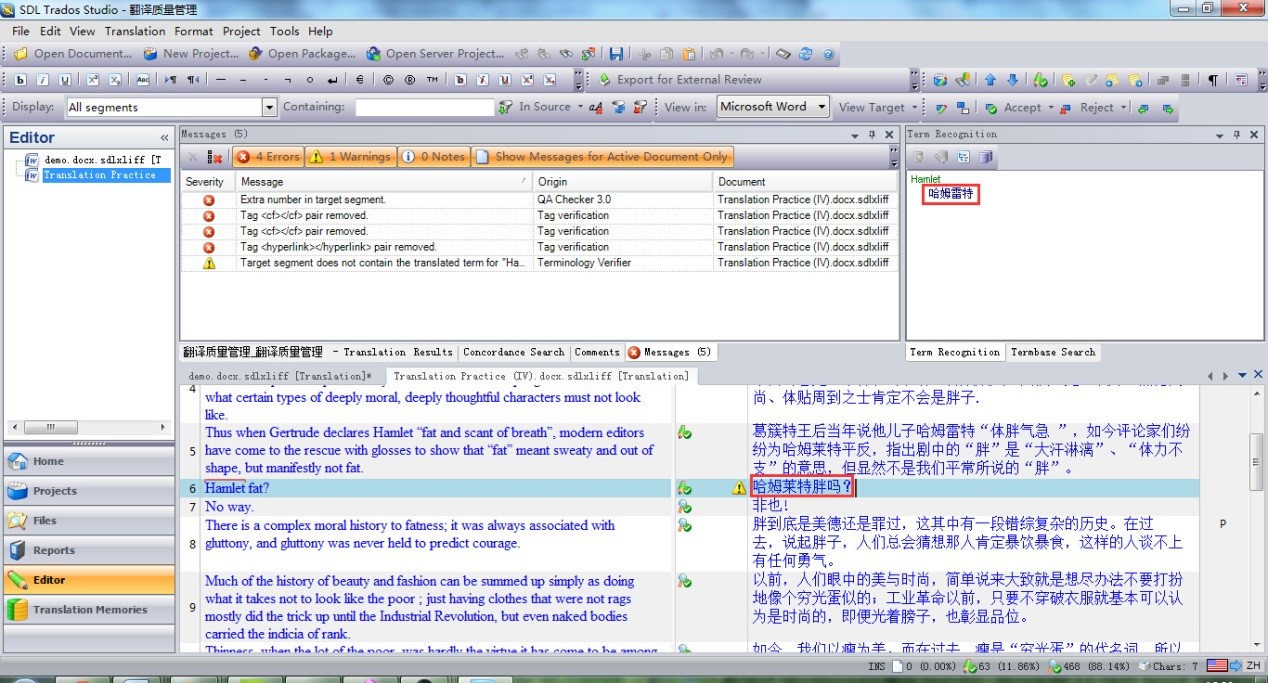

In SDL Trados Studio 2011, the proofreader should make sure that the project termbase has been added via “Tools—Options—Language Pairs—All Language Pairs—Termbases” and set the verification criteria (Fig.17) by ticking “Check for possible non-usage of the target terms” via “Tools—Options—Verification—Terminology Verifier—Verification Settings”.

Fig.17. Verification Settings in SDL Trados Studio 2011

The proofreader may then verify terminology consistency via “Tools—Verify”. In the example of terminology verification in SDL Trados Studio 2011 shown in Fig.18, Segment 8 is issued a Warning because “哈姆莱特” (Hamlet) in the target text differs from “哈姆雷特” in the termbase.

Fig.18. A Warning Against “哈姆莱特” in Segment 8

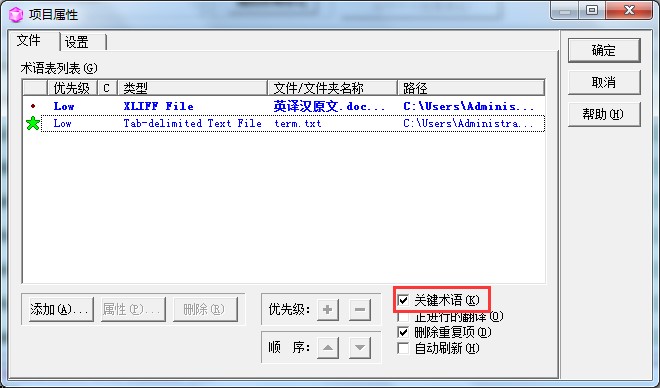

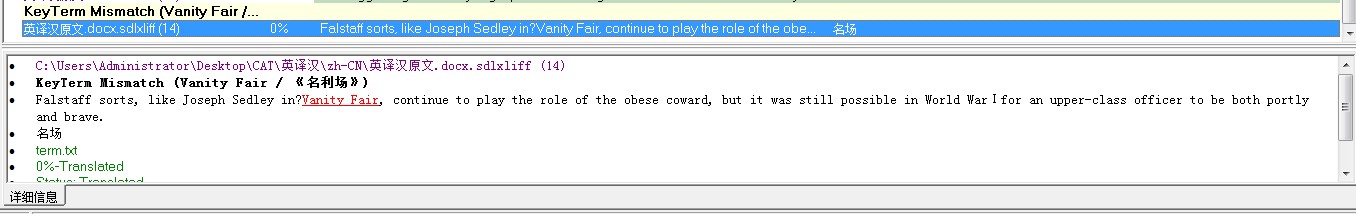

Similarly, in ApSIC Xbench the proofreader may check for KeyTerm mismatches with its QA checker via “Project—Properties—Add XLIFF File (Ongoing Translation) and Tab-delimited Text File (KeyTerm)—Check Ongoing Translation”. Note that the tab-delimited text file should be created by saving an Excel glossary as a tab-delimited TXT file (Fig.19). If the translation of a term in the target text does not match the one in the termbase, the QA checker in ApSIC Xbench issues a Warning (Fig.20).

Fig.19. Project Properties for KeyTerm in ApSIC Xbench

Fig.20. KeyTerm Mismatch in ApSIC Xbench
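The KeyTerm check itself is simple to sketch: for every glossary entry whose source term occurs in a source segment, confirm that the approved target term occurs in the corresponding target segment. The Python sketch below reads a tab-delimited glossary of the kind described above; the function names and the plain substring matching are assumptions, and neither SDL Trados Studio nor ApSIC Xbench is claimed to work this way.

```python
import csv
import io


def load_glossary(tsv_text):
    """Parse 'source<TAB>target' lines, e.g. an Excel glossary saved as tab-delimited text."""
    reader = csv.reader(io.StringIO(tsv_text), delimiter="\t")
    return {
        row[0].strip(): row[1].strip()
        for row in reader
        if len(row) >= 2 and row[0].strip()
    }


def keyterm_mismatches(segments, glossary):
    """Return (segment_no, source_term, expected_target) where the expected term is absent."""
    warnings = []
    for no, (source, target) in segments.items():
        for src_term, tgt_term in glossary.items():
            # Naive substring matching: case-insensitive on the source side only.
            if src_term.lower() in source.lower() and tgt_term not in target:
                warnings.append((no, src_term, tgt_term))
    return warnings
```

Plain substring matching ignores inflection and can over- or under-match (e.g. “Hamlet” inside “Hamlets”), which is one reason the resulting Warnings still need human review.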

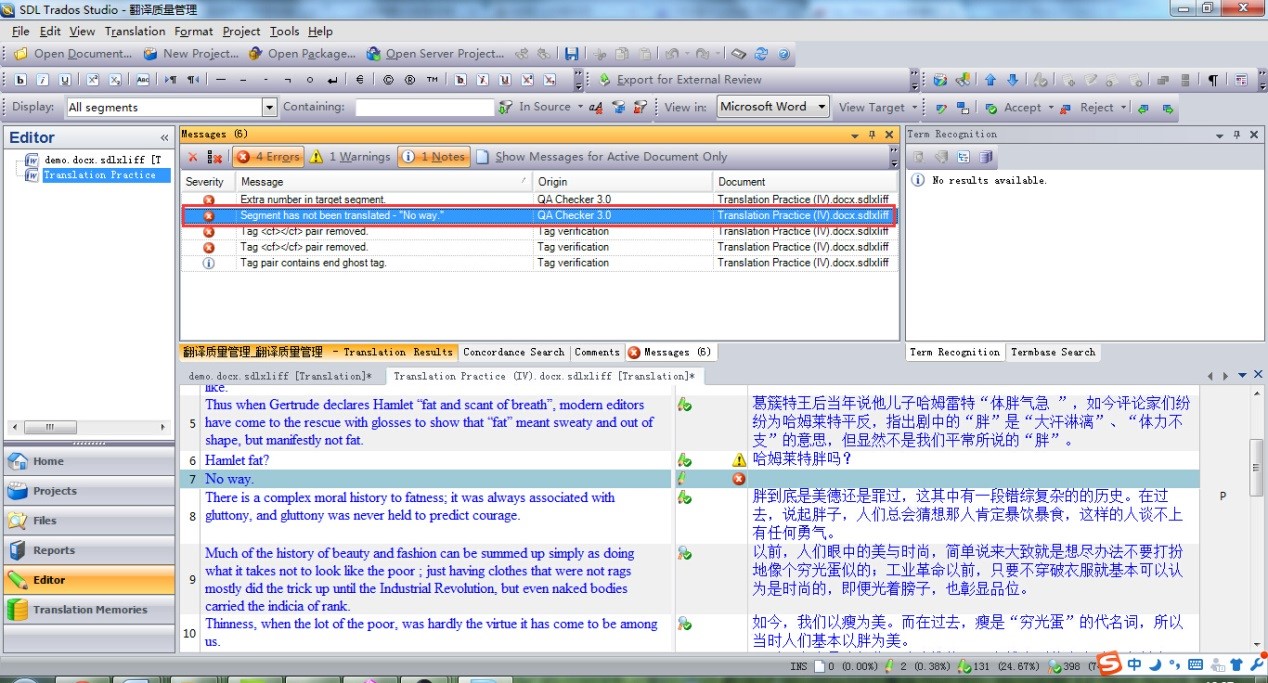

3.3.4. Forgotten and Empty Translation

Forgotten and empty translations are segments that have not been translated. In SDL Trados Studio 2011, the proofreader may check for empty translations via “Tools—Verify” (Fig.21). Similarly, in ApSIC Xbench, the proofreader may check for untranslated segments via “Project—Properties—Add XLIFF File—Tick Ongoing Translation—Check Ongoing Translation” (Fig.22). Untranslated segments are issued an Error.

Fig.21. Untranslated Segment in SDL Trados Studio 2011
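A check for untranslated segments can be sketched as follows. Besides empty targets, the Python sketch below also flags targets identical to their source, on the assumption that a copied-over source is a common form of forgotten translation; the function name and labels are hypothetical.

```python
def untranslated_segments(segments):
    """Map segment numbers to an issue label for empty or copied-over targets."""
    issues = {}
    for no, (source, target) in segments.items():
        if not target.strip():
            issues[no] = "empty"
        elif target.strip() == source.strip():
            # Heuristic: a target identical to the source may have been forgotten.
            issues[no] = "identical to source"
    return issues
```

Segments such as proper names, product codes or figures may legitimately be identical in source and target, so this second category is a prompt for review rather than a definite error.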

4. Conclusion

This study has explored translation project quality management from a technological perspective. It has given examples of tools for alignment, frequency counts and communication, of techniques for improving the coherence of translations, and of QA checkers such as those in SDL Trados Studio 2011 and ApSIC Xbench.

The study shows that: 1) a successful translation project is not the result of QA performed after translation is done, but the product of conscientious efforts by all team members throughout the project; 2) during Plan Quality Management, translators may use SDL Trados WinAlign or ABBYY Aligner to align previously translated documents when no translation memory is available, and the PM may use AntConc to count word frequencies in the text to be translated; 3) during Perform Quality Assurance, team members may use Youdao Cloud Cooperation to update terminology and communicate, and translators may merge segments or use a real-time preview of the target text to make the translation more coherent; 4) during Control Quality, the proofreader may use the QA checkers in SDL Trados Studio 2011 or ApSIC Xbench to check for spelling mistakes, numeric mismatches, KeyTerm mismatches and untranslated segments. The proofreader should, however, treat the numeric-mismatch judgements of the QA Checker in SDL Trados Studio 2011 with caution.

The study has theoretical implications for translation studies from the technological perspective and provides practical insights into the quality management of translation projects for today’s LSPs.

References

Hönig, H. (1998). Positions, power and practice: Functionalist approaches and translation quality assessment. Schäffner.

House, J. (1977). A Model for Translation Quality Assessment (2nd edition). Tübingen: Narr.

House, J. (1997). Translation Quality Assessment: A Model Revisited. Tübingen: Narr.

House, J. (2004). Concepts and Methods of Translation Criticism: A Linguistic Perspective. In H. Kittel, A. P. Frank, N. Greiner, T. Hermans, W. Koller, J. Lambert, & F. Paul (Eds.), Übersetzung-Translation-Traduction: An International Encyclopedia of Translation Studies (pp. 698-718). Berlin: de Gruyter.

House, J. (2007). Translation Criticism: From Linguistic Description and Explanation to Social Evaluation. In M. Bertuccelli Papi, G. Cappelli, & S. Masi (Eds.), Lexical Complexity: Theoretical Assessment and Translational Perspectives (pp. 37-52). Pisa: Pisa University Press.

Liu, W. (2014). Terminology Management in Team Translation—A Case Study Based on A Film Translation Project and A Website Localization Project. Unpublished Master’s thesis, Shanghai International Studies University.

Lv, L., & Yan, L. L. (2014). Translation Project Management. Beijing, China: National Defense Industry Press.

Project Management Institute. (2013). A Guide to the Project Management Body of Knowledge (PMBOK® Guide) (Fifth Edition). PA: Project Management Institute, Inc.

Wang, H. S., & Zhang, Y. X. (2015). Translation Quality Control: A Technological Perspective. Journal of Language and Literature Studies, (4), 1-5.

Wang, H. S., Leng, B. B., & Cui, Q. L. (2013). Rethink the Map of Applied Translation Studies in Information Age. Shanghai Journal of Translators, (1), 7-13.

Wang, H. W., & Wang, H. S. (2013). A Practical Guide to Translation Project Management. Beijing, China: China Translation & Publishing Corporation.

Wang, Z. (2013). Context in Translation Memory System. Shanghai Journal of Translators, (1), 69-72.

Wang, Z., & Sun, D. Y. (2009). An Analysis of the Strengths and Weaknesses of Translation Memory in Translation Teaching. Foreign Language World, (2), 16-22.

Williams, M. (2004). Translation Quality Assessment: An Argumentation-Centered Approach. Ottawa: University of Ottawa Press.

Xu, B., & Guo, H. M. (2012). Translation Quality Control under the Environment of CAT. Shandong Foreign Language Teaching Journal, (5), 103-108.

Xu, B., & Guo, H. M. (2015). Non-technical Translation: A CAT-Based Practice. Chinese Translators Journal, (1), 71-76.

This article was supported by the Innovation Program for Graduate Students of Shanghai Maritime University (Grant No. 2015ycx013).